For two years, we've been told AI is coming for our jobs. Dario Amodei warns of 'hundreds of millions' displaced. McKinsey publishes reports on the 'AI job apocalypse.' Every headline screams about automation eliminating white-collar work.

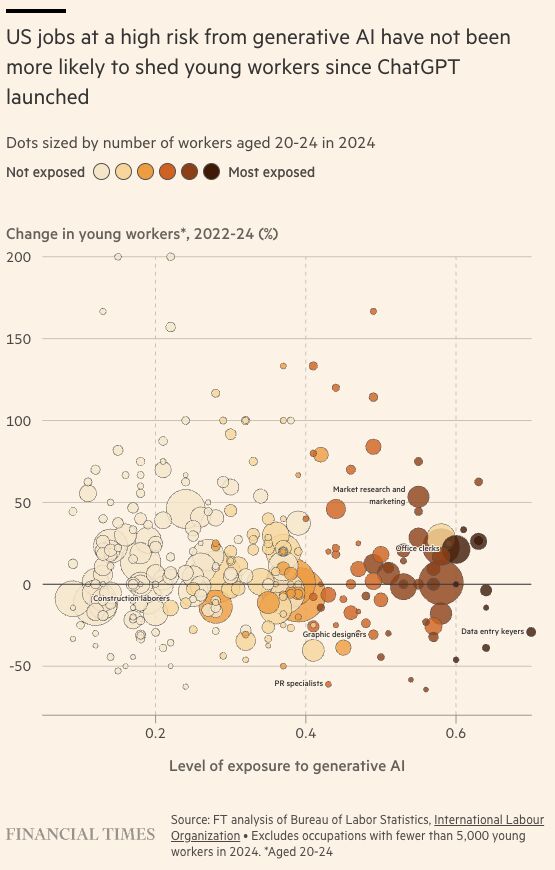

Then I spotted a curious chart in a recent Financial Times article. It showed exactly the opposite of what everyone expected.

We're through the looking glass on AI jobs. Workers in AI-exposed occupations are experiencing faster job growth than everyone else. Not slower. Not disappearing. Growing faster.

The map is upside down: the jobs closest to AI are growing fastest.

Yet the Financial Times buried this finding in analysis hedged with caveats, explaining it away with "multiple factors" and "complex dynamics." They somehow missed the implications of their own reporting. Maybe it was post-pandemic corrections, they suggested. Maybe selection bias. Maybe anything except the obvious: maybe the "AI job apocalypse" is bullshit.

We've been here before. ATMs were supposed to eliminate bank tellers - instead banks hired more tellers and opened more branches. Spreadsheets would make accountants obsolete - we got more accountants than ever. Each time, the doomers of their day predicted job destruction. Each time, we got job multiplication instead.

Real jobs disappear - typists, film processors. That's genuinely painful and can be catastrophic for the people affected. But the pattern isn't musical chairs as we know it - it's the opposite. Instead of taking chairs away, we keep adding entire new rooms full of chairs.

This time feels different for many because this time feels personal. Knowledge workers thought they were safe. For many of them, blue-collar automation was someone else's problem. Now Dario Amodei warns that AI could displace "hundreds of millions" of workers, and suddenly everyone's paying attention. McKinsey publishes 27-page reports about the "gen AI paradox" - their term for why AI adoption isn't boosting earnings. Consultants propose elaborate transformation programs to capture productivity gains that refuse to materialise.

Meanwhile, the transformation everyone's looking for is already happening. We're just staring at it backwards.

The confusion stems from three fundamental misunderstandings about how transformational technologies evolve and reshape work. First, we expect to measure productivity gains that have never been measurable during platform transitions. Second, we assume AI agents will replace human workers, when they actually require constant human navigation. Third, we treat AI as a special case when it's following the same platform evolution pattern as every technology before it.

Each misunderstanding compounds the others. Economists can't find productivity gains they were never going to find. Executives plan for autonomous AI that can't actually be autonomous. Policymakers prepare for job displacement while missing the early signals of job multiplication.

The result is a massive misdirection. While everyone argues about whether AI will destroy employment, AI is quietly following the same platform evolution that has historically driven job multiplication. Not through some mysterious force or complex dynamic, but through predictable economic patterns that have driven job growth for centuries.

The only thing mysterious is why we keep acting surprised.

When Gold Rushes Become Boring

We all struggle to imagine anything beyond minor variations of current experience. When we think about AI and jobs, we see today but with robots - machines doing what humans do now, just faster and cheaper. Our brains take the cheap path. We project the present forward and miss over-the-horizon options.

This is why every generation tends to get technological transformation wrong in ways that look comical in retrospect. Before spreadsheets, the fear was "computers will replace accountants." The reality? An explosion of financial analysis jobs nobody could imagine when calculation was constrained by human arithmetic speed. Before the internet, the worry was "digital will destroy retail." The reality? E-commerce created entirely new categories of work from logistics coordination to customer experience design.

The pattern repeats because we can't see the capabilities that don't exist yet. Simon Wardley spent decades developing a simple framework that helps us walk over this horizon. His evolution model maps how every successful technology - and many other capabilities - follows the same predictable progression: Genesis (one-off experiments) → Custom (bespoke implementations) → Product (packaged solutions) → Commodity (invisible infrastructure). There’s a common thread of standardisation running through these phases.

Wardley's framework shows us not just where technologies are going, but what becomes possible at each stage. Crucially, you can't skip steps - each stage builds on the last. Edison's custom electrical installations were impressive demonstrations, but they didn't transform the economy. The power grid did - when electricity became commodity infrastructure cheap enough for every factory to use.

Room-sized IBM mainframes were computing marvels, but they didn't create millions of jobs. Cloud computing, on the other hand, did - when computational power became accessible enough for every startup to build software products that would have required massive infrastructure investments just decades earlier.

AI is racing through the same cycle right now, from OpenAI's research lab to APIs that any developer can integrate over a weekend. And we're hitting the sweet spot - the transition from Product to Commodity where job multiplication explodes.

Here's what's actually happening while everyone argues about consciousness and ‘superintelligence’: AI capabilities are becoming commodity infrastructure. It's the same pattern I documented in "A Hitchhiker's Guide to the AI Bubble": cutting-edge capabilities that once required PhD teams and million-dollar budgets are becoming off-the-shelf APIs that any developer can call with a credit card.

The numbers tell the story. OpenAI charged $60 per million tokens for GPT-3 in 2020. Today's equivalent costs $0.07. That's not pricing strategy - that's what happens when revolutionary capabilities become everyday infrastructure.
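
To put that drop in perspective, here is a quick back-of-the-envelope calculation. It uses only the two prices quoted above; the variable names are mine and purely illustrative.

```python
# Prices per million tokens, exactly as quoted in the text above.
price_2020 = 60.00   # GPT-3 in 2020
price_today = 0.07   # "today's equivalent"

# How many times cheaper, and the percentage decline.
fold_decrease = price_2020 / price_today
percent_drop = (1 - price_today / price_2020) * 100

print(f"Roughly {fold_decrease:.0f}x cheaper")
print(f"A price drop of about {percent_drop:.2f}%")
```

On those figures the price fell by a factor of roughly 857, which is a decline of about 99.9%.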

But this commoditization doesn't just make existing work cheaper. It enables entirely new categories of work that were previously impossible or prohibitively expensive.

Think about what becomes possible when AI capabilities get cheap enough for any organization to deploy. A mid-sized law firm can now afford document analysis that rivals what BigLaw spends millions developing. A local hotel can now provide fluent customer service in dozens of languages - simply impossible before. A manufacturing startup can implement computer vision quality control without recruiting PhD computer scientists from Stanford.

These aren't efficiency improvements within existing job categories. They're capability leaps that create entirely new workflow requirements. But these capabilities don't deploy themselves.

Every organization that suddenly gains access to AI capabilities they couldn't afford before needs people to figure out what those capabilities should actually do. The question is not so much how to implement them technically - the commoditization process handles a lot of that - as how to apply them strategically to business problems that previously had no economically viable solution.

This is where platform job creation happens: more in the application layer than the infrastructure layer. When spreadsheets were invented, companies no longer needed to hire programmers to build custom reports. But they hired many more financial analysts who could leverage spreadsheet capabilities to explore investment strategies and risk models that would have been prohibitively expensive to investigate with custom software development.

When content management systems commoditized web publishing, organizations didn't need more developers to hand-code systems that generated HTML. They needed content strategists who could design information architectures and user experiences that were impossible with static websites.

The pattern repeats with AI platforms, but the job categories emerging are more diverse because AI capabilities are more general-purpose. Legal document review specialists who can train and audit AI systems for accuracy and bias. Financial analysts who can design AI-augmented forecasting workflows that incorporate market signals no human could process manually. Customer service managers who can orchestrate human-AI collaboration patterns that deliver personalized support at scale.

These aren't IT jobs with AI tools bolted on. They're domain-specific jobs that exist because AI capabilities made new approaches to old problems economically viable for the first time. The legal specialist isn't just using AI to work faster - they're exploring forms of legal analysis that were impossible when document review was limited by human reading speed.

And yes, we should expect software development jobs to multiply too. When AI coding assistants make programming easier and cheaper, organizations that couldn't afford custom software development suddenly can. It's the Jevons paradox - make something more efficient and you get more demand, not less. Wider roads, more traffic. And with cheaper development we might see organizations swing from buying SaaS to building custom solutions - or conversely an explosion of micro-SaaS for every niche problem that is newly economically viable to solve.

The employment data reflects this platform reality. We're seeing growth in roles that apply AI capabilities to specific business problems - roles that require understanding both the domain and the technology well enough to bridge between them effectively.

Lightcast's analysis of 1.3 billion job postings found that 51% of roles requiring AI skills are now outside IT and computer science, with an 800% surge in generative AI roles across non-tech sectors since 2022. These postings carry a 28% salary premium - the market recognizing the value of domain expertise combined with AI capability.

The growth is happening exactly where you'd expect bridge roles to emerge: marketing and PR roles with AI skills up 50% year-over-year, HR up 66%, finance up 40%. Indeed's data shows implementation-focused roles rising fast - management consultant jobs mentioning generative AI jumped from 0.2% to 12.4% of all AI postings between January 2024 and January 2025.

Microsoft-LinkedIn's Work Trend Index confirms the demand shift: 66% of leaders prefer hiring candidates with AI skills even if less experienced, and non-technical professionals taking AI courses rose 160%.

The job growth in AI-exposed sectors that puzzled the Financial Times isn't anomalous. It's platform economics playing out exactly as Wardley's framework predicts. The question isn't whether AI will create jobs - it's how quickly organizations will recognize the opportunity and adapt their hiring to take advantage of capabilities that were economically impossible just a few years ago.

Lotus 1-2-3 Eaters

I used to work in the back office of a large investment bank in the City of London at the dawn of personal computers. We had a team whose job was preparing financial reports for fund managers. These were printed out on giant dot matrix printers strewn all over the place, paper emerging in endless perforated streams that had to be torn off and sorted.

If a fund manager wanted to add a field or change something in a report, there was a work request, development cycle, testing phase - basically a complete software development process that could take weeks. Those requests were queued up for the dev team with constant stakeholder battles over prioritization. The fund managers had infinite appetite for custom analysis, but they were constrained by the cost and complexity of getting anything built.

Then came Lotus 1-2-3 - the dominant spreadsheet before Excel took over - and it changed everything.

Suddenly, the same fund managers who had been rationing their requests for custom reports were building their own sophisticated financial models. They weren't just doing the same analysis faster - they were doing analysis that had never been possible before. Scenario modeling, sensitivity analysis, complex portfolio calculations that would have taken the dev team months to build were now being created over lunch breaks.

The spreadsheet didn't destroy jobs in our department. It exploded them. Within two years, there were more people working on financial analysis than ever before, but they were doing completely different work. Instead of waiting weeks for basic reports, analysts were exploring investment strategies that would have been prohibitively expensive to investigate before. The demand for analysis hadn't been satisfied by faster reports - it had been artificially constrained by the cost of getting them built.

This is how platform technologies work. They don't just make existing work more efficient. They remove constraints that were artificially limiting demand, unleashing appetites that no one knew existed because they had never been economically feasible to satisfy.

The Lotus 1-2-3 pattern is playing out again with AI, but this time the spreadsheet can read, write, and analyze images. When AI tools become accessible enough for domain experts to apply directly - without requiring PhD computer scientists as intermediaries - we get the same demand explosion, but across every knowledge domain simultaneously.

Take legal document review. Traditionally, this meant junior lawyers and paralegals grinding through thousands of documents - expensive, slow work limited by human reading speed. Law firms could only afford thorough review for their biggest cases. Everyone else got the abbreviated version.

Now courts accept AI-assisted review, and the tools do more than just speed up reading. They can synthesize timelines, map communications, and surface patterns across thousands of documents - all at AI-assisted speeds while humans apply judgment for accuracy and defensibility. Suddenly, the kind of deep analysis that was only economically viable for million-dollar cases becomes possible for routine litigation.

It's the same constraint removal we saw with spreadsheets. The demand for deeper legal analysis was always there - it was just economically invisible because serving it was impossible. Remove the constraint, and law firms discover they have massive appetite for investigation they couldn't afford before.

Marketing teams are having their own Lotus moment, testing AI that can analyze consumer sentiment across millions of social media posts in real time. Researchers are using AI to synthesize literature across domains no human could read in a lifetime. Financial analysts are building scenario models that incorporate dozens of real-time market signals simultaneously.

None of this is replacing human work - it's enabling human work that couldn't exist before. The bottleneck limiting organizational insight was the cost and complexity of analysis. Remove that bottleneck, and you don't eliminate the need for human judgment - you multiply demand for human judgment applied at higher levels of leverage.

The early job growth in AI-exposed sectors makes perfect sense once you understand this pattern. Organizations are starting to hire more analysts, researchers, and specialists because AI capabilities make new forms of analysis economically feasible. It's not anomalous job growth - it's job multiplication following the exact same pattern we saw when spreadsheets made financial analysis accessible to every fund manager in London.

The 500-Year Fool's Errand

McKinsey has a 27-page solution for why widespread AI adoption isn't boosting company earnings. They call it the 'gen AI paradox' - apparently unaware that economists have been calling this the 'productivity paradox' for decades, ever since Solow's famous observation in 1987. Their diagnosis: firms need CEO-scale rewiring and enterprise transformation programs to capture productivity gains that refuse to materialize.

This is spectacularly wrong advice built on a fundamental misunderstanding of how platform transitions work.

McKinsey treats weak near-term productivity data as proof that firms must scale harder - erecting agentic meshes and rewiring at enterprise scope. That's exactly backwards for a platform transition whose gains arrive through work reorganization that standard metrics systematically miss. Building a CEO transformation program around short-term productivity numbers isn't rigour - it's a category error with a large price tag.

Here's what McKinsey missed: there is no paradox. Solow already described this in 1987: "You can see the computer age everywhere but in the productivity statistics." Computers were obviously transforming individual work - analysis that would have taken weeks was being completed in hours - yet aggregate productivity data showed no clear gains for years. This same "productivity paradox" persisted through the 1980s and early 1990s, even as anyone using computers could feel their transformative impact.

The reason you can't see AI productivity gains in company P&L statements is the same reason economists struggled for decades to measure computer productivity, and why it took 560 years to quantify the printing press's economic impact. The yardstick moves.

The pattern goes back centuries. Recent research by Jeremiah Dittmar found that cities with printing presses in the late 1400s grew 60% faster than cities without them. But this research was published in 2011 - over 560 years after the technology's introduction. Economists had struggled for centuries to find macroeconomic evidence of the printing press's impact, despite its obvious role in enabling the Scientific Revolution, the Reformation, and mass literacy.

Why do productivity measurements break down during technological transitions? Because transformational technologies don't just make existing work more efficient - they reorganise everything around new capabilities.

In my old banking job, we reconciled trading positions using pen, paper, and desktop calculators. The investment bank had mainframes, but office PCs didn't exist yet. When I try to imagine doing today's work with pen and paper, the questions pile up. How long would current tasks take? Can I make a finger-in-the-air estimate of the productivity gain I personally get from a computer? Do I even allow a desktop calculator into this thought experiment, or do I have to excavate long division? The question becomes impossible to answer. All our work is now moulded around computer capabilities. For most tasks, there is simply no pen-and-paper analog to be measured.

This is why productivity measurement breaks down during technological transitions. By the time economists figure out how to measure the gains, everyone's doing completely different jobs. Job titles change, processes get redesigned, expectations shift to accommodate capabilities that didn't exist before. Workers aren't doing the same tasks faster - they're doing fundamentally different work.

The yardstick moves. In the early years, firms spend money on data, software, and process redesign that accounting treats as expenses. Meanwhile, workflows get rebuilt and people figure out how to use the new tools. Firms look less productive while they're in the middle of reorganizing everything. Then the gains show up later, after everyone's forgotten what the old way looked like - economists call this the J-curve.
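
The J-curve shape can be sketched with a toy calculation. This is an illustration rather than an economic model: every number below is invented, and the function name and parameters are hypothetical. It assumes a firm expenses its reorganization costs for a few years, sees no gains until the reorganization is done, and then accumulates gains year by year.

```python
def measured_output(years=8, base_output=100.0, invest_years=3,
                    invest_cost=15.0, payoff_per_year=12.0):
    """Toy J-curve. All figures are hypothetical, chosen only to show the shape."""
    series = []
    for year in range(years):
        investing = year < invest_years
        # During the transition, spending is expensed and drags down measured output.
        cost = invest_cost if investing else 0.0
        # Gains only begin once the reorganization is finished, then build up.
        gain = 0.0 if investing else payoff_per_year * (year - invest_years + 1)
        series.append(base_output - cost + gain)
    return series


for year, value in enumerate(measured_output()):
    marker = "below baseline" if value < 100.0 else "above baseline"
    print(f"year {year}: {value:6.1f}  ({marker})")
```

In this sketch the firm looks worse than its pre-transition baseline of 100 for the first three years and only then pulls ahead, which is the dip-then-rise pattern the term describes.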

McKinsey's approach shows exactly how productivity obsession leads to terrible advice. Their big claims - "estimated impact... 60-90 percent" and "80 percent of level 1 incidents resolved automatically" - are made-up scenarios, not real results from companies that actually tried this stuff. Yet they're telling CEOs to rewire everything based on slideware maths while admitting that "recurring costs can exceed the initial build investment" - with no numbers showing how any of this pays for itself.

If you expense the build, ignore the workflow reorganisation happening during transition, and average the gains, you will undercount a platform shift by design - and you will buy the wrong transformation.

McKinsey's own language betrays their misunderstanding: "Gen AI is everywhere - except in company P&L." They're selling expensive solutions to a measurement problem that's existed for 500 years. The transformation they're looking for is already happening. They just can't see it because they're staring at the wrong numbers.

We're not witnessing a productivity paradox. We're witnessing the predictable measurement confusion that occurs when the yardstick moves.

Why AI Layoffs Hand Market Share to Competitors

The layoffs are real and brutal. Over 300,000 tech workers lost their jobs in 2023 and 2024 - numbers approaching dot-com crash levels. Every headline screams about AI automation eliminating positions. Yet the data shows AI-exposed jobs growing, not shrinking.

So what's going on?

We're watching two different things happen at once: companies cutting specific jobs while the economy creates new ones. It's not contradictory - it's reorganization.

Companies aren't swapping humans for software. They're rebuilding how work gets done while figuring out which roles actually matter. When Dropbox talks about "repositioning for the AI era" or IBM pauses hiring for back-office work, they're making bets about what their organizations will look like in two years.

The maths is simple but gets buried in the drama. If AI doubles your team's output and you can sell the extra output, firing people means handing market share to competitors who keep their teams and scale up. As the old saying goes - "those who make half a revolution only dig their own graves". Cuts only make sense when demand is capped - thin sales pipelines, maxed distribution, regulated pricing, or business models that are fundamentally changing.
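
A minimal worked version of that arithmetic, under loudly stated assumptions: two identical firms of ten people each, AI exactly doubles output per person, and the market absorbs everything both firms produce. The firms, headcounts, and function name are all hypothetical.

```python
def market_share(own_output, rival_output):
    """Share of total output, assuming all output from both firms gets sold."""
    return own_output / (own_output + rival_output)


workers = 10                 # hypothetical headcount at each firm
output_before = 1.0          # output per worker before AI (arbitrary units)
output_after = 2.0           # "AI doubles your team's output"

# Before AI: two identical firms split the market evenly.
share_before = market_share(workers * output_before, workers * output_before)

# After AI: firm A halves its team, firm B keeps everyone.
firm_a_output = (workers // 2) * output_after   # 5 people, doubled output
firm_b_output = workers * output_after          # 10 people, doubled output
share_after = market_share(firm_a_output, firm_b_output)

print(f"Firm A share before: {share_before:.0%}")
print(f"Firm A share after cutting half its team: {share_after:.0%}")
```

Under these assumptions firm A produces exactly what it did before, but its share falls from a half to a third because firm B doubled its output. If demand were capped instead, the extra output could not be sold and the comparison would no longer hold.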

That's why responses vary so wildly. Some companies face genuine demand constraints where AI productivity means fewer people. Others are in growth mode where AI lets them tackle workloads that were impossible before - with bigger teams, not smaller ones.

As far as headlines go: the cuts are visible and dramatic. Mass layoffs get press releases. The new hiring is scattered across thousands of companies and doesn't get labeled "AI jobs" in the statistics. It just looks like normal hiring, except the job descriptions now mention AI skills.

This also explains why companies are sitting on record cash reserves - roughly $7.55 trillion among US non-financial corporations. Whether it's uncertainty about autonomous agents, post-COVID caution, or broader economic risks, they're keeping their powder dry during a major transition.

Companies tend to move in three steps: automate routine tasks, pilot AI to assist complex work, then rewire end-to-end workflows around the tech. We're between steps two and three. The routine wins already happened and show up in some layoff announcements. Large-scale workflow redesign is still experimental, so the hiring shows up in scattered job postings rather than clean headcount data.

You can see this stabilization starting in sectors that moved first. Law firms are building legal-tech specialist teams. Hospitals are launching ambient-AI and clinical AI programs. Banks are hiring gen-AI enablement leaders for their revenue businesses. These aren't one-for-one replacements - they're entirely new job categories.

This 'Great Reorganization' looks chaotic and painful - and of course it is, especially for people losing their jobs. But the underlying pattern points toward job multiplication, not elimination. The transition is messy, but the economics favour human-AI collaboration over pure automation.

Three Kinds of Survivors

The employment data tells only half the story. Yes, AI-exposed jobs are growing rather than shrinking. But the growth isn't uniform - it's creating a fundamental split in how knowledge work gets organized.

Three distinct categories are emerging from this reorganization:

Navigation Experts have deep understanding of what constitutes good versus bad output in their field. I've called these "quality maps" - the mental models that let you spot when something looks plausible but is actually wrong. A senior legal researcher who can tell the difference between a compelling but legally flawed argument and a mundane but precedent-solid one. A financial analyst who knows when algorithmic trading signals deserve investigation versus dismissal.

These professionals become more valuable, not less, because their judgment scales across AI-generated possibilities they could never explore manually. While the AI can generate possibilities all day, it can't judge quality. Someone has to steer.

Amplification Natives grew up inside AI-augmented workflows and developed entirely new approaches to knowledge work. They're comfortable with iterative refinement and prompt wrangling. Think of the junior developers who were the earliest adopters of "vibe-driven development" and spec-first coding, and who formed communities around these AI-native approaches. Or content creators who think in terms of AI-assisted ideation and human-guided curation.

Unlike traditional workers adapting AI tools to existing processes, they've designed their cognitive habits around AI capabilities from the start. These workers execute faster than traditional experts because they've internalized AI-native workflows rather than bolting AI onto legacy approaches.

Integrators and Governors represent the operational layer that makes AI reliable at scale. They handle data plumbing, evaluation systems, monitoring, compliance, and change management - the unglamorous infrastructure that turns impressive demos into dependable business processes. The accountants who become AI audit specialists. The project managers who evolve into AI workflow coordinators. The quality assurance professionals who specialize in human-AI collaboration protocols.

This is where many traditional mid-tier workers can land if they reskill effectively.

This Amp Goes Up to 11

The displacement isn't vertical - AI doesn't simply replace "lower skill" with "higher skill" work. It's lateral. Within every cognitive domain, workers who can navigate AI capabilities effectively are outcompeting workers who cannot, regardless of qualifications or experience levels. A junior analyst who's learned to pair AI research with domain-specific judgment can outperform a senior analyst who treats AI as an enhanced search engine.

This isn't elevation to some utopian "strategic work." It's amplification to maximum intensity. The AI doesn't make work easier - it turns the volume up to eleven. Everyone's working louder, not smarter, pushing harder against new limits instead of old ones.

This explains the data. Yes, there are brutal layoffs happening - but most of them aren't because of AI. They stem from post-COVID corrections, funding dry-ups, and companies figuring out what they actually need. Meanwhile, AI-exposed sectors are quietly growing because AI makes analysis, content creation, and research cheap enough that organizations can afford way more of it.

Conclusion

"Well! I've often seen a cat without a grin, but a grin without a cat! It's the most curious thing I ever saw in all my life!" - Alice

We're having a furious debate about something that's already disappearing. The "AI job crisis" is like the Cheshire Cat - by the time we finish arguing whether it exists, only the grin will remain.

While economists scramble to measure productivity gains that transformational technologies have never shown clearly during transitions, while policymakers prepare for mass displacement that platform evolution makes unlikely, consultants sell transformation programs to manage changes that are unfolding naturally according to predictable patterns.

AI capabilities are commoditizing into platform infrastructure through the same evolution that has governed every major technology transition. The whole productivity measurement exercise is cargo cult economics - economists pretending they can quantify something that's never been quantifiable.

The job market is restructuring around AI amplification, creating new categories of work faster than old ones disappear.

What started as one puzzling chart has become overwhelming evidence. Data from PwC, Lightcast, and multiple other sources all show the same pattern - job growth in AI-exposed sectors, wage premiums for AI skills, expansion beyond tech industries. This isn't an anomaly. It's platform job multiplication at scale.

The brutal layoffs are real, but they reflect competitive reorganization within the platform transition, not mass replacement by autonomous systems. The net effect over time is job growth because AI enables organizations to tackle workloads that were previously economically impossible.

By the time this restructuring becomes fully visible in employment statistics, AI-augmented collaboration will be so normal, so invisible, that our children won't understand what we were so worried about. They won't debate whether "computers create jobs." They'll pilot cognitive exoskeletons the same way we use spreadsheets: as unremarkable infrastructure for getting work done.

The transformation isn't coming. It's here. But it's invisible to economists obsessed with productivity metrics, while becoming so normal for workers that we're forgetting how transformative it actually is. We're not debating whether AI will change work - we're already working differently and calling it ordinary.