Why the dream of AGI rests on undiscovered mathematics, biochemical hand-waving, and Silicon Valley's accidental religion.

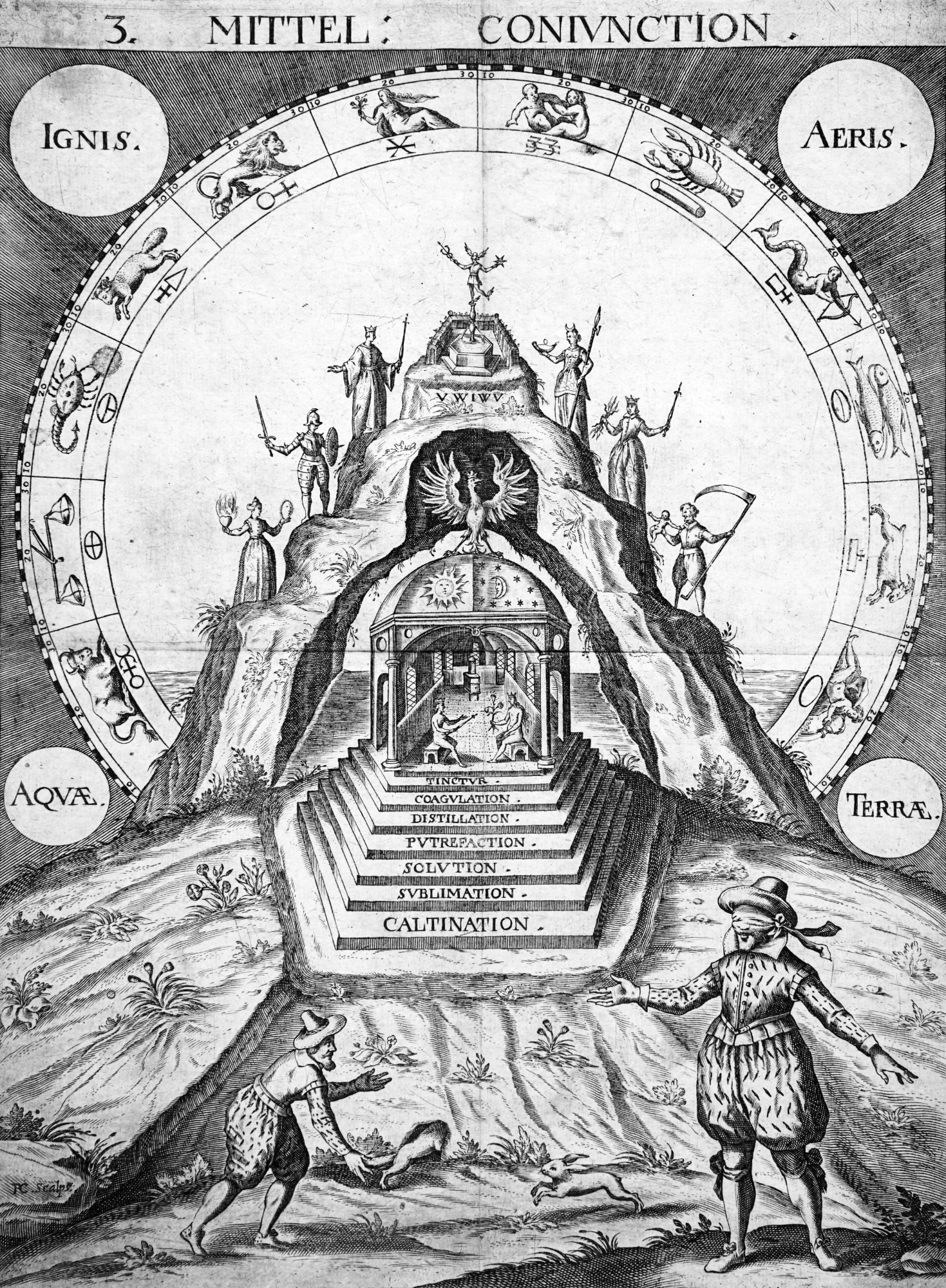

Isaac Newton spent more time on alchemy than physics.

The man who gave us calculus, who explained gravity, who literally invented modern science - he spent decades trying to turn lead into gold. He wrote over a million words on alchemy, conducted thousands of experiments, built furnaces in his Cambridge rooms. He was convinced the secret was there, just out of reach.

He wasn't alone. Robert Boyle, the father of chemistry. Tycho Brahe, who mapped the stars. Even Leibniz dabbled. The brightest minds of the Scientific Revolution believed that with enough intelligence, enough effort, enough faith, they could crack the code of transmutation.

They never made an ounce of gold.

In a previous article, I called AGI "alchemy-level nonsense." The response was predictable: "You're looking at the wrong timescale." "It's not about 5 years, it's about 50." "These are the smartest people in tech - give them time."

But I'm not talking about timescales. I'm not saying AGI is hard, or that it will take longer than expected. I'm saying it's impossible. Not 10 years impossible. Not 100 years impossible. Never-ever impossible. Lead-into-gold impossible.

I say this because years ago, Dr. Roman Belavkin - a researcher in cognitive science at Middlesex University - explained to me why we currently can't even model a single biological neuron, and it is unknown whether we ever will. The maths doesn't exist yet. When I've mentioned this to other AI enthusiasts, they dismiss it as 'just another challenge to overcome'.

So let me share what mathematicians who study cognitive systems have been trying to tell us. Why the smartest people in tech are chasing something that violates the constraints of current mathematics and biology. Why all the compute in the world won't help, any more than better furnaces would have helped Newton make gold.

The parallels run deeper than metaphor. Just like the alchemists, today's AGI seekers are brilliant, dedicated, and completely wrong about what's possible. But also like the alchemists, they're building something valuable while chasing the impossible.

Alchemists Were Actually Brilliant

We mock medieval alchemists now, but they weren't fools. For over two thousand years, alchemy attracted the greatest minds in science: Newton and Boyle in England, the Islamic polymath Jabir ibn Hayyan, and the thousands of alchemists employed by Chinese emperors. This wasn't fringe science - it was science.

They were empiricists working from observable facts. Gold doesn't rust. Neither does silver. But iron does. Lead is soft and heavy. So is gold. Mercury is liquid metal that dissolves other metals. Surely these shared properties meant metals were related - variations of some primary substance that could be transmuted with the right process?

This wasn't magical thinking. It was rational inference from available evidence. They could alloy metals, change their properties with heat, dissolve and precipitate them. They watched caterpillars become butterflies. Why not lead into gold?

The social dynamics helped. European princes needed gold to fund wars. Islamic scholars sought the elixir of life. Chinese emperors wanted immortality. Every civilization, every power structure, had reasons to fund the dream. For centuries, the smartest people alive, backed by unlimited resources, chased transformation.

And it worked - just not how they expected. Alchemists invented distillation, crystallization, sublimation. They discovered phosphorus while boiling down urine in search of the philosopher's stone. They mapped acids and bases while seeking universal solvents. They created gunpowder while chasing immortality.

The dream sustained itself through a paradox: every failure taught them something useful. Can't make gold? Here's how to purify silver instead. Can't find the elixir of life? Here's how to make better medicines. The impossible goal funded the possible discoveries.

By the time chemistry emerged as a real science, alchemists had built the entire laboratory tradition. The equipment, techniques, and methodology that would reveal why transmutation was impossible came from centuries of trying to achieve it.

The alchemists had better evidence for their beliefs than AGI proponents today have for theirs. They could see metals shared properties. They could demonstrate partial transformations. They had thousands of years of metallurgy suggesting malleability.

What evidence do we have that silicon can become conscious? That backpropagation - which requires smooth, differentiable functions - can somehow model neurons whose all-or-nothing spikes are fundamentally non-differentiable? That pattern matching in text can become understanding?

The alchemists at least started with lead and gold - two things that actually exist. We're starting with statistics and consciousness - and one of those might not even be a thing.

The Puddle Wakes Up

Douglas Adams once wrote about a puddle that wakes up one morning and thinks: "This is an interesting world I find myself in - an interesting hole I find myself in - fits me rather neatly, doesn't it? In fact it fits me staggeringly well, must have been made to have me in it!"

The puddle is, of course, exactly wrong. The hole wasn't made for water. Water just takes the shape of whatever contains it. But from the puddle's perspective, the fit seems too perfect to be coincidence.

This is Silicon Valley's relationship with intelligence. We've built machines that process text, and now we think intelligence is text-shaped. We've created systems that recognize patterns, so we believe consciousness is pattern recognition. The fit seems too perfect to be coincidence.

Robert Epstein, a cognitive scientist, puts it bluntly: "Your brain is not a computer." We've been seduced by our own metaphor. Brains don't store memories like files. They don't process information like CPUs. They don't run algorithms. The computational metaphor was useful once, but we've forgotten it's a metaphor.

Yet here we are, building a religion around it. And I mean that literally. AGI serves the same psychological function as religion: it promises transcendence, meaning, purpose. It offers Silicon Valley a secular rapture where we upload our consciousness and defeat death.

Look at the language. "The Singularity." "Alignment." "Existential risk." These aren't technical terms - they're theological concepts. We have prophets (Kurzweil), apostates (Hinton), and heretics (anyone who doubts). We have sacred texts (Turing and Emil Post's papers), origin myths (the Dartmouth Conference), and an eschaton (AGI).

The social dynamics are identical to religious movements. True believers get funded and platformed. Skeptics are dismissed as "not understanding exponential growth" - the tech equivalent of lacking faith. Every failed prediction gets memory-holed while every incremental advance proves the prophecy.

The puddle mistakes the shape of its hole for the shape of the world. We make the same mistake when we assume intelligence is computational.

Which brings us to the mathematics. Because reality - particularly biological reality - has a very specific shape. And it's not differentiable.

Mathematics of Impossible Things

Scientists recently mapped one cubic millimeter of human brain tissue - a piece the size of a grain of sand. It took 1.4 petabytes of data. Not to simulate it. Not to model it. Just to store a static 3D photograph.

One grain of sand worth of brain tissue. Frozen. Dead. Not even trying to capture how it works - just what it looks like. And it takes more data than Netflix uses to stream to a small country.

Inside that grain? 57,000 cells. 150 million synapses. Each synapse is a chemical factory with over 1,000 different proteins. Each protein changes shape and function based on what's happening around it. And that's just the photograph. The frozen moment. To model how it actually works? We don't even know where to start.
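To get a feel for that scale, you can divide the figures out. This is a back-of-envelope sketch that assumes decimal units (1 PB = 10^15 bytes) and spreads the image volume evenly across cells and synapses. The real dataset is image voxels, not per-synapse records, so treat the results as orders of magnitude only.

```python
# Back-of-envelope scale check using the figures quoted above.
# Assumes decimal units (1 PB = 10**15 bytes). Spreading the image volume
# evenly over cells and synapses is only a rough way to get a feel for scale.
TOTAL_BYTES = 1.4e15        # 1.4 petabytes of imaging data
CELLS = 57_000
SYNAPSES = 150_000_000

bytes_per_cell = TOTAL_BYTES / CELLS
bytes_per_synapse = TOTAL_BYTES / SYNAPSES

print(f"~{bytes_per_cell / 1e9:.1f} GB of images per cell")        # ~24.6 GB
print(f"~{bytes_per_synapse / 1e6:.1f} MB of images per synapse")  # ~9.3 MB
```

That works out to roughly 9 MB of static imagery per synapse, before anyone has tried to describe what the synapse does.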

This is where the dream of artificial general intelligence hits a wall that nobody talks about. Over coffee, years ago, my friend Roman Belavkin explained the problems with modeling biological neurons. These problems should be headline news, but instead they stay buried in academic conferences.

I needed to understand: were these problems speed bumps or brick walls?

The more Roman explained, the clearer it became to me. These aren't speed bumps. They're mathematical voids.

The problem starts with backpropagation.

Let me explain what that means, because it's the heart of why AGI is impossible with current mathematics.

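Most deep learning systems today - ChatGPT, Claude, Gemini - are trained with an algorithm called backpropagation. Think of it like teaching a child: you show them a picture of a cat, they guess "dog," you tell them how wrong they were, and they adjust their mental model. Do this millions of times and they learn to recognize cats. Backpropagation does the adjusting with calculus. It uses the chain rule to work out how much each connection contributed to the error, then nudges each connection accordingly. That only works if every step in the network is differentiable, meaning it has a well-defined slope.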
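Here is a minimal sketch of that loop for a single artificial neuron. It assumes a sigmoid activation, squared error and a made-up four-point dataset. A deep network applies the same chain rule layer by layer, but the key point is visible even here: every update needs the derivative of the activation function.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy data (made up): label 1 ("cat") when the input is positive, else 0 ("dog").
data = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (2.0, 1.0)]

w, b = 0.0, 0.0   # the "mental model": one weight and one bias
lr = 0.5          # size of each adjustment

for epoch in range(200):
    for x, target in data:
        # Forward pass: make a guess.
        y = sigmoid(w * x + b)
        # How wrong was it? Squared error E = 0.5 * (y - target)**2.
        dE_dy = y - target
        # Chain rule: dE/dz = dE/dy * dy/dz, where sigmoid'(z) = y * (1 - y).
        dE_dz = dE_dy * y * (1.0 - y)
        # Each parameter's share of the blame, then a small step against it.
        w -= lr * dE_dz * x
        b -= lr * dE_dz

predictions = [round(sigmoid(w * x + b)) for x, _ in data]
print(w, b, predictions)
```

If the activation had no usable slope, `dE_dz` would be zero and nothing would ever change.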

The 1943 Problem

Why don't we just model real neurons? We can model neurons at many levels of detail, but even the best models are cartoon sketches. The original McCulloch-Pitts model from 1943 used simple on/off switches with step functions. These are not differentiable, so they couldn't have worked with backpropagation (which hadn't been invented yet). In the 1980s, to make backpropagation work, we replaced the step functions with smooth curves. But we're still using the same basic framework: weighted sums, linear algebra, one-dimensional signals.
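You can see the difference numerically. This sketch estimates the slope of a McCulloch-Pitts-style step function and of a sigmoid using central finite differences. The test points and step size `h` are arbitrary choices. Wherever the step's slope is defined, it is zero, so a gradient gives training nothing to follow.

```python
import math

def step(z):
    # McCulloch-Pitts style threshold unit: fires (1) or doesn't (0).
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    # The smooth replacement that made gradient-based training possible.
    return 1.0 / (1.0 + math.exp(-z))

def slope(f, z, h=1e-6):
    # Central finite-difference estimate of the derivative f'(z).
    return (f(z + h) - f(z - h)) / (2 * h)

for z in (-1.0, -0.1, 0.1, 1.0):
    print(f"z={z:+.1f}  step slope={slope(step, z):.3f}  "
          f"sigmoid slope={slope(sigmoid, z):.3f}")

# At exactly z = 0 the step jumps, and the estimate explodes instead of
# settling on a finite slope.
print("step slope estimate at z = 0:", slope(step, 0.0))
```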

If we tried to model biological detail - the neurotransmitters, the dendritic processing, the living cellular machinery - we don't even have the mathematics to describe it, let alone compute it. A single biological neuron isn't a switch - it's a living cell, a chemical factory floating in a soup of neurotransmitters, hormones, and proteins.

When a signal arrives at a neuron, it doesn't just flow through like electricity through a wire. The signal arrives at branches called dendrites, and here's where everything breaks: each branch does its own processing. Not simple addition or multiplication - complex, unpredictable transformations that we struggle to describe mathematically.
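The crudest caricature of branch-level processing is a "two-layer" neuron, in which each branch applies its own nonlinearity before the cell body sums the results. This sketch assumes a sigmoidal branch response with an arbitrary threshold and gain, plus unit weights. Real dendrites are far messier than this. The point is narrower: a single weighted sum can't tell clustered inputs from scattered ones, while even this toy can.

```python
import math

def branch_nonlinearity(s, threshold=1.5, gain=10.0):
    # Sigmoidal "dendritic spike": a branch responds strongly only when its
    # own local input crosses a threshold (values here are arbitrary).
    return 1.0 / (1.0 + math.exp(-gain * (s - threshold)))

def point_neuron(branches):
    # Classic artificial neuron: one sum over all inputs
    # (weights fixed at 1 to keep the comparison simple).
    return sum(sum(branch) for branch in branches)

def two_layer_neuron(branches):
    # Each branch sums its own inputs and applies its own nonlinearity;
    # the cell body then adds up the branch outputs.
    return sum(branch_nonlinearity(sum(branch)) for branch in branches)

clustered = [[1.0, 1.0], [0.0, 0.0]]   # both active inputs on one branch
dispersed = [[1.0, 0.0], [1.0, 0.0]]   # same inputs, spread across branches

for name, pattern in (("clustered", clustered), ("dispersed", dispersed)):
    print(name, point_neuron(pattern), round(two_layer_neuron(pattern), 3))
```

Both patterns look identical to the point neuron (a total of 2.0). The branch model responds strongly to the clustered pattern and barely at all to the dispersed one.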

Imagine you're trying to predict the flow of a river. But this river has thousands of tributaries, and each tributary follows its own rules - some flow uphill, some disappear underground and reappear elsewhere, some spontaneously change direction based on the phase of the moon. Now try to write an equation for where a drop of water will end up. That's what we're trying to do with neurons.

Two Killers

Roman described a number of fundamental issues with current approaches to AGI. As I understood them, these aren't engineering challenges - they're mathematical impasses. I’ll cover two of them here:

First: Chemistry that doesn't exist in AI

There are no neurotransmitters in artificial neural networks. None. Zero. Not dopamine, serotonin, GABA, glutamate - nothing. We're missing the entire chemical dimension of neural signaling.

In real brains, dozens of different neurotransmitters carry signals between neurons. Each synapse responds to a specific neurotransmitter, and a single neuron has receptors for many different types. Meanwhile, our AI models treat all synapses as identical - just different weights on the same type of signal.

As Roman explained, these neurotransmitters aren't just signals - they're dimensions. To model this would require multilinear (tensor) algebra, not the simple linear algebra we use. IBM is trying to invent "tensor-tensor algebra" because current maths literally cannot describe these relationships. Think about what that means. We need mathematics that doesn't exist yet.
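To make "dimension" concrete: a standard artificial neuron's weights carry one index. Suppose each synapse also carries several transmitter channels, and a modulator concentration vector reshapes every weight. That adds two more indices and turns the dot product into a contraction over a three-index tensor. This is a hypothetical toy. The channel counts, `T` and `m` are invented, and it says nothing about real receptor chemistry. It only shows what the extra indices look like, and how the same input can flip sign depending on chemical context.

```python
# Standard artificial neuron: inputs and weights are vectors, and the output
# is a single dot product (one index: linear algebra).
def linear_neuron(x, w):
    return sum(wi * xi for wi, xi in zip(w, x))

# Hypothetical toy extension: x[i][c] is the signal at synapse i on
# transmitter channel c, m[d] is the concentration of modulator d, and the
# weights become a three-index tensor T[i][c][d]. The output is multilinear
# (linear in x and linear in m separately).
def multilinear_neuron(x, m, T):
    return sum(
        T[i][c][d] * x[i][c] * m[d]
        for i in range(len(x))
        for c in range(len(x[i]))
        for d in range(len(m))
    )

x = [[1.0, 0.0], [0.0, 1.0]]   # synapse 0 uses channel 0, synapse 1 uses channel 1
# Channel 0 excites when modulator 0 is present; channel 1 inhibits when
# modulator 1 is present. Same values for both synapses.
T = [[[1.0, 0.0], [0.0, -1.0]] for _ in range(2)]

for m in ([1.0, 0.0], [0.0, 1.0], [1.0, 1.0]):
    print(m, multilinear_neuron(x, m, T))
```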

Second: The frozen brain problem

Brains don't train then deploy. Every perception changes the brain perceiving it. Every thought rewrites the apparatus that produced it. Learning and performing happen simultaneously, continuously, inseparably.

Our models can't do this. Not won't - can't. Real plasticity means the architecture itself reorganizes with every input. The connections don't just change weights - they form, die, restructure. We don't have mathematics for systems that fundamentally rewrite themselves while running.

The current state of AI, Roman explained, is like "putting wires into a dead brain and trying to get answers." The network has been trained, its weights are frozen. What you're talking to is a fossil of the training process.
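Here is a minimal sketch of the difference, using hypothetical class names. A deployed model's weights never change after training. An online learner (plain stochastic gradient descent on squared error here) updates its weights with every input. Even then, only the values in a fixed-length list change. The number of connections is decided when the object is created.

```python
# A deployed model: weights are fixed after training and never change.
class FrozenModel:
    def __init__(self, weights):
        self.weights = tuple(weights)   # immutable: a "fossil" of training

    def predict(self, x):
        return sum(w * xi for w, xi in zip(self.weights, x))

# An online learner: every input nudges the weights (plain SGD on squared
# error). Only the *values* change; the number of connections stays fixed.
class OnlineModel:
    def __init__(self, n_inputs, lr=0.1):
        self.weights = [0.0] * n_inputs
        self.lr = lr

    def predict_and_learn(self, x, target):
        y = sum(w * xi for w, xi in zip(self.weights, x))
        error = y - target
        self.weights = [w - self.lr * error * xi
                        for w, xi in zip(self.weights, x)]
        return y

frozen = FrozenModel([0.5, 0.5])
print(frozen.predict([1.0, 2.0]), frozen.predict([1.0, 2.0]))  # same answer twice

online = OnlineModel(2)
online.predict_and_learn([1.0, 2.0], 1.0)
print(online.weights, len(online.weights))  # values moved, shape did not
```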

Yes, they're bolting on RAG systems and calling it "continuous learning," but that's like taping a notebook to a statue and calling it alive.

Neurons are living cells. If life-like processes are prerequisite to general intelligence, we currently lack a mathematical definition of 'being alive,' let alone an engineering analogue. That's a pre-theoretic gap, not a compute gap.

Other Problems Stack Up

As if those two killers weren't enough:

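Real neurons spike - sudden, all-or-nothing electrical pulses. Mathematically, a spike is a step: its true gradient is zero almost everywhere, which leaves backpropagation nothing to work with. Researchers use 'surrogate gradients', smooth stand-ins substituted during training, to work around this, but these workarounds don't capture the actual dynamics.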
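Here is a minimal sketch of what that workaround looks like. It uses the textbook leaky integrate-and-fire caricature of a spiking neuron, plus a "fast sigmoid" surrogate, which is one common choice among several. All parameter values are arbitrary. The forward pass uses the hard step. The surrogate is simply a different function that gets used in place of the step's true derivative during training.

```python
def spike_fn(v, threshold=1.0):
    # Forward pass: a hard step. Its true derivative is 0 almost everywhere.
    return 1.0 if v >= threshold else 0.0

def surrogate_grad(v, threshold=1.0, slope=5.0):
    # Backward-pass stand-in: derivative of a "fast sigmoid", used *instead of*
    # the step's real derivative so gradient descent has something to follow.
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2

def simulate_lif(currents, tau=10.0, threshold=1.0, dt=1.0):
    """Leaky integrate-and-fire neuron; returns a 0/1 spike train."""
    v, spikes = 0.0, []
    for i in currents:
        v += dt * (-v / tau + i)      # leak toward 0, integrate input current
        s = spike_fn(v, threshold)    # all-or-nothing event
        spikes.append(int(s))
        if s:
            v = 0.0                   # reset after the spike
    return spikes

spikes = simulate_lif([0.3] * 20)
print(spikes, sum(spikes))
print(surrogate_grad(1.0), surrogate_grad(0.0))
```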

Glial cells - which we ignored for a century - actively shape how neurons communicate, release their own chemicals, and outnumber neurons in some brain regions. We're missing more than half the system.

An Impossible Engineering Problem

The optimists have their responses, of course.

"We don't need biological fidelity," they say. "Airplanes don't flap their wings."

True. But humans had been studying flight systematically for centuries - Leonardo da Vinci's Codex on the Flight of Birds dates to 1505. By the early 1800s, George Cayley had established the fundamental principles: lift, drag, thrust, weight. The Wright brothers weren't discovering new science; they were solving engineering problems with materials and engines. They understood the physics and could define what flight meant.

And here's the deeper problem: if you're not modeling biological intelligence, what exactly are you modeling? The brain is the only example we have of intelligence. There's no other data point. When you abandon biological fidelity, you're not building toward intelligence - you're building something else entirely and hoping it turns out intelligent.

When cornered, suddenly everything is "intelligent" - plants, fungi, ant colonies, flocks of birds. But when pitching to investors and governments, AGI means "surpassing humans at all cognitive tasks." Ask an AGI researcher: what's the function you're optimizing? What principles of intelligence have you extracted? They'll give you extrapolations, not specifications. You can't engineer your way to a destination you can't define.

"Intelligence will emerge at scale," they insist.

Yes, scale helped with translation. But translation is pattern matching between texts. Emergence isn't magic. What rule of matrix multiplication creates consciousness? Which property of transformer architectures generates understanding? They can't tell you because they don't know. Believing scale will create consciousness is like believing a tall enough ladder gets you to the moon.

"But Turing proved computation is universal."

Yes, any computable function can be computed. But what's the function that takes sensory input and outputs consciousness? Nobody knows. We can't even say whether the brain is computing in Turing's sense.

Iris van Rooij and colleagues at Radboud University argue that building human-level cognition by learning from data is computationally intractable, so no realistic amount of compute will scale current approaches into AGI. It's not a scale problem. It's an architecture problem. A mathematics problem. A we-don't-even-know-what-we-don't-know problem.

The very success of current AI makes the problem worse. ChatGPT is so good at mimicking understanding that people think it actually understands. It's like being impressed by a parrot reciting Shakespeare and concluding the parrot must understand iambic pentameter.

But watch what happens when these systems fail. They don't fail like humans fail - forgetting a detail or mixing up names. They fail in ways that reveal the complete absence of understanding. They'll invent legal citations that sound perfect but never existed, getting lawyers sanctioned. They'll confidently explain historical events that never happened. They'll maintain perfect grammar while contradicting themselves from one sentence to the next. Not mistakes - glitches that show there's no coherent world model underneath.

Nudge the pixels of an image by amounts invisible to humans, and an AI that correctly identified a panda suddenly insists, with high confidence, that it's a gibbon. Why? Because it's not seeing a panda. It's detecting statistical patterns that happen to correlate with the label "panda" in its training data. The patterns are brittle, hollow, nothing like understanding.
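Here is a minimal sketch of how such an attack works. It uses the fast gradient sign method (FGSM, from Goodfellow and colleagues) on a toy linear classifier rather than a real image model. The weights, bias, flat grey "image" and the labels "panda" and "gibbon" are all made up for illustration. For a linear score, the gradient with respect to the input is just the weight vector, so the attack moves every pixel slightly against the sign of its weight.

```python
def score(w, x, b):
    # Toy linear classifier: positive score means "panda", otherwise "gibbon".
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def classify(w, x, b):
    return "panda" if score(w, x, b) > 0 else "gibbon"

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_lower_score(w, x, eps):
    # For a linear score the input gradient is w, so stepping each pixel by
    # eps against sign(w) lowers the score as fast as possible per pixel.
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]

n = 1000                                            # number of "pixels"
w = [0.01 if i % 2 == 0 else -0.01 for i in range(n)]
b = 0.1
x = [0.5] * n                                       # a flat grey "image"
x_adv = fgsm_lower_score(w, x, eps=0.02)

print(classify(w, x, b), "->", classify(w, x_adv, b))
print("largest per-pixel change:", max(abs(a - c) for a, c in zip(x_adv, x)))
```

No single pixel moves by more than 0.02, but the changes all push the same way. Across a thousand pixels they add up to a 0.2 swing in score (0.02 times the summed absolute weights), which is enough to flip the label.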

A Note on "Impossible"

"But you can't prove it's impossible!"

True. Scientists can't prove gravity won't reverse tomorrow either. Yet nobody's funding anti-gravity startups. Nobody's spending $400 billion trying to reverse Earth's gravitational field. The burden of proof somehow only goes one way in AI.

When I say AGI is impossible, I mean: it requires mathematics that doesn't exist to model biology we don't understand to implement functions nobody can define. That's "impossible" in any practical sense.

For investors, policymakers, and journalists: when the timeline is "somewhere between tomorrow and never," bet on never. When the requirement is "undiscovered mathematics," that's not a roadmap, it's a prayer.

The honest position isn't hedging about possibilities. It's admitting that AGI is impossible until proven otherwise. And the proof requires showing the mathematics first, not promising to discover it later.

What Would Change My Mind

Show me:

- The tensor mathematics for modeling neurotransmitter interactions

- A system that actually learns while running - architecture changing with input, not RAG or fine-tuning

- The mathematical function that produces consciousness from sensory input

- A definition of intelligence you're building toward that isn't circular

Not promises. Not roadmaps. Not "we're seeing sparks." Working mathematics and clear definitions.

The Biological Mimicry Trap

You’ll often hear a reasonable-sounding objection: “We’ve beaten nature plenty of times without copying how it works.”

And sure - calculators don’t use neurons, just silicon. Wheels outperform legs. Engines outperform muscles. Submarines don’t have gills. Planes don’t flap their wings.

We’ve also copied nature when it suited us: velcro from burrs, sonar from bats, drugs from plants. But in every case - whether we mimicked biology or not - we understood the principles first.

We knew how arithmetic worked. We understood lift and drag. Even the stuff we borrowed from biology made sense mechanistically before it scaled.

That’s what makes AI different.

The only part of the field claiming real progress toward AGI is built on neuron-inspired, gradient-trained architectures. OpenAI, Anthropic, DeepMind - they’re all in on neural networks, betting that scaling these artificial neurons will somehow give rise to intelligence.

But when pressed on how biologically plausible any of this is, they retreat.

OpenAI, whose entire stack is built on artificial neurons, now defines AGI as “a highly autonomous system that outperforms humans at most economically valuable work.”

Ray Kurzweil, long time prophet of brain modeling, says AGI just needs to “match what an expert in every field can do, all at the same time.”

This is bait-and-switch. They lean on biology to justify the architecture - then invoke functional definitions to escape scrutiny - while continuing to pour everything into the neuron-modeling path. No mechanisms. No theory. No alternative.

And if artificial neurons can’t get us there - which they can’t, because the mathematics to model real ones doesn’t exist and may never exist - there’s no Plan B. Scaling and scaffolding aren’t a theory. You don’t get to bet everything on neuron mimicry while claiming the biology doesn’t matter.

If mimicry is the path, show the biology. If it’s not, show your theory. Right now, we’ve got neither. Just metaphors with a GPU budget.

The Better Furnace Fallacy

The alchemists kept building better furnaces.

Each generation convinced themselves they were making progress. Hotter temperatures, purer materials, more precise measurements. The furnaces of 1400 were primitive compared to those of 1600. Surely they were getting closer to transmutation?

They weren't. Not one percent closer. Not one thousandth of a percent closer. Zero percent closer. Because transmutation requires nuclear physics, not better heating.

Today's AI researchers are building better furnaces. They call them GPUs.

GPT-3 had 175 billion parameters. GPT-4 is widely reported to have over a trillion. The training runs cost millions, then tens of millions, now hundreds of millions. OpenAI's latest cluster reportedly has 100,000 GPUs. Microsoft and Google are planning million-GPU clusters. The furnaces keep getting bigger.

Sam Altman has reportedly sought up to $7 trillion for compute. Seven trillion dollars of furnaces. He might as well be asking for $7 trillion to build a furnace that reaches the sun's core temperature. It still won't make gold.

This is the most expensive category error in history.

We're Not "On The Way"

Here's what the AI establishment doesn't want to admit: we're not "on the way" to AGI. We're not even on a path.

Being "on the way" implies you're traveling toward a destination. It implies progress, direction, movement toward a goal. But you can't be on the way to a place that requires different physics.

The Guardian recently quoted tech analyst Benedict Evans calling the entire AGI race "vibes-based." Aaron Rosenberg from Radical Ventures immediately redefined AGI as "80th percentile human-level performance in 80% of economically relevant digital tasks." Watch that goalpost move. When the furnace-builders realize they can't make gold, they'll announce they were always trying to make bronze.

The Compute Cult

The deep learning revolution created a dangerous syllogism:

1. We scaled compute and got better language models

2. Better language models seem more intelligent

3. Therefore, more compute equals more intelligence

This is like observing:

1. We made furnaces hotter and could melt more metals

2. Melting metals seems closer to making gold

3. Therefore, hotter furnaces equal transmutation

The leap doesn't follow. But once you've invested billions in furnaces, it's hard to admit you're not actually making progress toward gold.

Nvidia isn't selling GPUs. They're selling furnaces to alchemists. And business is booming - their market cap hit $3 trillion on furnace sales alone. Every AI lab, every tech giant, every government is panic-buying furnaces, terrified of being left behind in the transmutation race.

This year alone, according to the Guardian, tech companies will spend $400 billion on AI infrastructure. Not on research into the missing mathematics. Not on understanding biological neurons. On compute. On bigger furnaces.

That's more than the combined defense spending of the EU's member states. More than the GDP of many nations. All spent on the assumption that if we just make the furnaces hot enough, consciousness will emerge.

The Human Factor

A recent exchange crystallized this for me. When I mentioned AGI's impossibility, someone immediately defended the field's luminaries: "Hinton, Tegmark, and Bostrom aren't fools or shills."

Not fools or shills. Hinton revolutionized neural networks - brilliant work, just not neuroscience. Tegmark is an accomplished physicist - but this isn't physics. Bostrom... well, the less said the better.

The point is: Hinton understands his mathematical abstractions perfectly. But the gap between those abstractions and biological reality? That's where the impossibility lives.

This isn't conspiracy. It's specialization. The AI researchers perfecting backpropagation don't study molecular neuroscience. The neuroscientists mapping synapses don't design learning algorithms. The funders reading executive summaries don't see the chasm between fields.

Nobody has to lie. Everyone just has to stay in their lane.

I once met an architect for one of the early UK nuclear power plants. She told me the architectural team had raised concerns about nuclear waste from the start. They were told not to worry - the scientists were working on it and would have it solved by the time it mattered.

That was over sixty years ago. The waste is still there. The solution never came.

Same pattern, different field. Everyone assumes someone else has the hard part covered. The architects trusted the physicists. The physicists trusted future physicists. Nobody was lying. Everyone was just wrong about what was possible and assumed that difficult just meant 'not yet.'

The field self-selects for optimists - people who believe artificial intelligence is possible, who want to be the ones to crack it. You don't go into AI research if you think it's impossible. You go into AI research because you dream of building minds. So the very people who might spot the fundamental problems are filtered out before they start.

Geoffrey Hinton, the "Godfather of AI," told Wired: "We really don't know how [deep neural networks] work." He says their success "works much better than it has any right to" - an empirical discovery that theory can't explain. Yoshua Bengio acknowledges that current systems learn in a "very narrow way" and make "stupid mistakes," lacking the mathematical foundations for reasoning or causal understanding. Yann LeCun admits nobody has "a good answer" for how to make neural networks capable of complex reasoning.

In 2017, Google researcher Ali Rahimi called the entire field "alchemy" - techniques that work without understanding why. The community erupted in debate, but the core point stood: we have amazing tools with no satisfying theory.

But somehow, when you put them in a room together, the specialization creates blind spots. They're all brilliant people climbing different faces of an impossible mountain, each assuming someone else has found the route to the top.

A commenter on Hacker News captured today's version perfectly: "If you believe the brain is a biological computer and AI computing keeps advancing, at some point it will be able to do the same stuff. It's just common sense."

Common sense. The same common sense that told us heavier objects fall faster. That the sun orbits the earth. That time flows the same everywhere. Common sense is what we believe before we do the science.

The science says: we can't model biological neurons. We lack the mathematics. We're not even wrong - we're pre-wrong. We don't know enough to be wrong correctly.

But careers depend on not seeing this. Grants require optimism. Stock prices need growth stories. Nations need to believe they're not falling behind in the "AI race."

So the impossible gets repackaged as inevitable. "Just a matter of time." "Engineering challenge." "Scaling problem." Anything but "mathematically impossible with current approaches."

Smart people believe in smartness. Give them enough time, enough resources, enough brilliant minds, and surely any problem yields. It's hubris dressed as optimism.

Playing the Reckoning

The strategic implications are worth examining.

The indicators are already visible if you know where to look.

Watch the language in earnings calls. "AGI" is quietly becoming "advanced AI." "Human-level intelligence" morphs into "human-level performance on specific tasks." The promises get vaguer, the timelines longer, the caveats more prominent.

Some companies are already pivoting. They're hiring fewer consciousness researchers, more product engineers. The job postings talk less about "solving intelligence" and more about "vertical applications." Money is moving from moonshots to market share.

But here's the twist: the bubble might not pop - it might deflate.

The infrastructure is already too big to fail. Governments have made AI a strategic priority. The GPU clusters exist. The talent is hired. The integrations are built. Just like the dot-com bubble left us fiber optic cables and data centers, this bubble is creating real infrastructure.

The reckoning might be a gradual admission, not a crash. The $560 billion doesn't evaporate - it gets rebranded. The consciousness researchers become product developers. The moonshot becomes the moon landing we already achieved: really good pattern matching at scale.

This creates different opportunities. Not disaster management, but transition management. The challenge is nuanced: preserve the real technical achievements, satisfy investor expectations, all while quietly abandoning the consciousness narrative.

OpenAI admits their latest model is "missing something quite important, many things quite important" (and that's putting it mildly). But they raised money at a $500 billion valuation anyway. Investors are hedging - buying both the AGI dream and the automation reality, pretending they're the same thing.

Watch for these signals:

- Research focus shifts from consciousness to optimization

- AGI gets quietly downgraded while timelines stretch from 5 years to 10 to "the coming decades"

- Job postings emphasize "ML engineering" over "AGI research"

- Conference talks pivot from theory to applications

Whether it pops or deflates, the winners will be those who understood early that ML infrastructure is the real prize. They'll inherit an entire ecosystem built on someone else's impossible dream.

A Parlour Trick in Real Time

As I wrote this, Google announced Genie 3 as their "latest step towards AGI." It's a world simulator - creates virtual ski slopes and warehouses. Impressive, yes. A step toward consciousness? No more than SimCity was in 1989.

When GPT-5 dropped last week we saw the same framing. Genuinely useful, nothing to do with consciousness, marketed as progress toward AGI. (I originally wrote this paragraph the week before it was released and only had to change the tense.)

Notice what they never share: a roadmap. Not because it's secret - because it doesn't exist. 'We're seeing sparks of AGI' translates to 'we're wandering and found something interesting.'

Even Eric Schmidt, with more access to AI labs than almost anyone, recently said digital superintelligence is coming 'within 10 years' - then immediately hedged that Silicon Valley timelines are usually 'off by one and a half or two times.' When the former Google CEO can't pin down whether it's 10 years or 20, you know they're not following a roadmap - they're making educated guesses about undiscovered breakthroughs.

Schmidt imagines we'll have 'Einstein and da Vinci in your pocket.' Always Einstein, never Marvin the Paranoid Android. Never your dullest coworker, but immortal. Never a consciousness that's mediocre, depressed, or obsessed with collecting stamps. The reflexive reach for genius-as-product shows they haven't thought through what they're building - just copied the brochure words. Why would artificial consciousness be Einstein rather than utterly banal? They can't say, because they've never questioned the sales pitch. That the former Google CEO can't imagine AGI being boring shows how much of this is marketing rather than engineering.

You can't build toward Einstein when you can't define intelligence, can't specify consciousness, can't even model the neurons you claim to be replicating.

Conclusion

When the AGI dream finally dies, it won't go quietly.

There will be congressional hearings about the $560 billion. Careers built on consciousness promises will evaporate. The true believers will splinter into camps: those who claim we were "almost there," those who pivot to quantum computing, and those who quietly update their LinkedIn to emphasize "practical AI solutions."

But watch what survives the reckoning.

Every ambitious failure leaves gifts. The alchemists never made gold, but they invented distillation, crystallization, and the experimental method. They mapped acids and bases. They created gunpowder while seeking immortality. Modern chemistry was born from their epic failure.

The AGI seekers won't build consciousness. But they've already built:

- Systems that can read and summarize at superhuman speed

- Models that translate between dozens of languages

- Tools that generate code from descriptions

- APIs that cost pennies to automate what used to take hours

We needed the AGI dream to fund the ML revolution. No government would spend $560 billion on "better search" or "automated customer service." But promise digital consciousness? Promise to win the intelligence race? The checkbooks open.

My advice: ignore the consciousness narrative, use the tools being discovered.

The revolution isn't artificial intelligence. It's augmented human intelligence at scale.