Ed Zitron's viral takedown of AI economics makes one genuinely concerning point: companies are spending $560B to generate $35B in revenue. That 16:1 ratio is unprecedented. He's absolutely right to highlight it.

But that's where his analysis peaks. After that, he swallows the hype he claims to hate.

The cost story is more complex

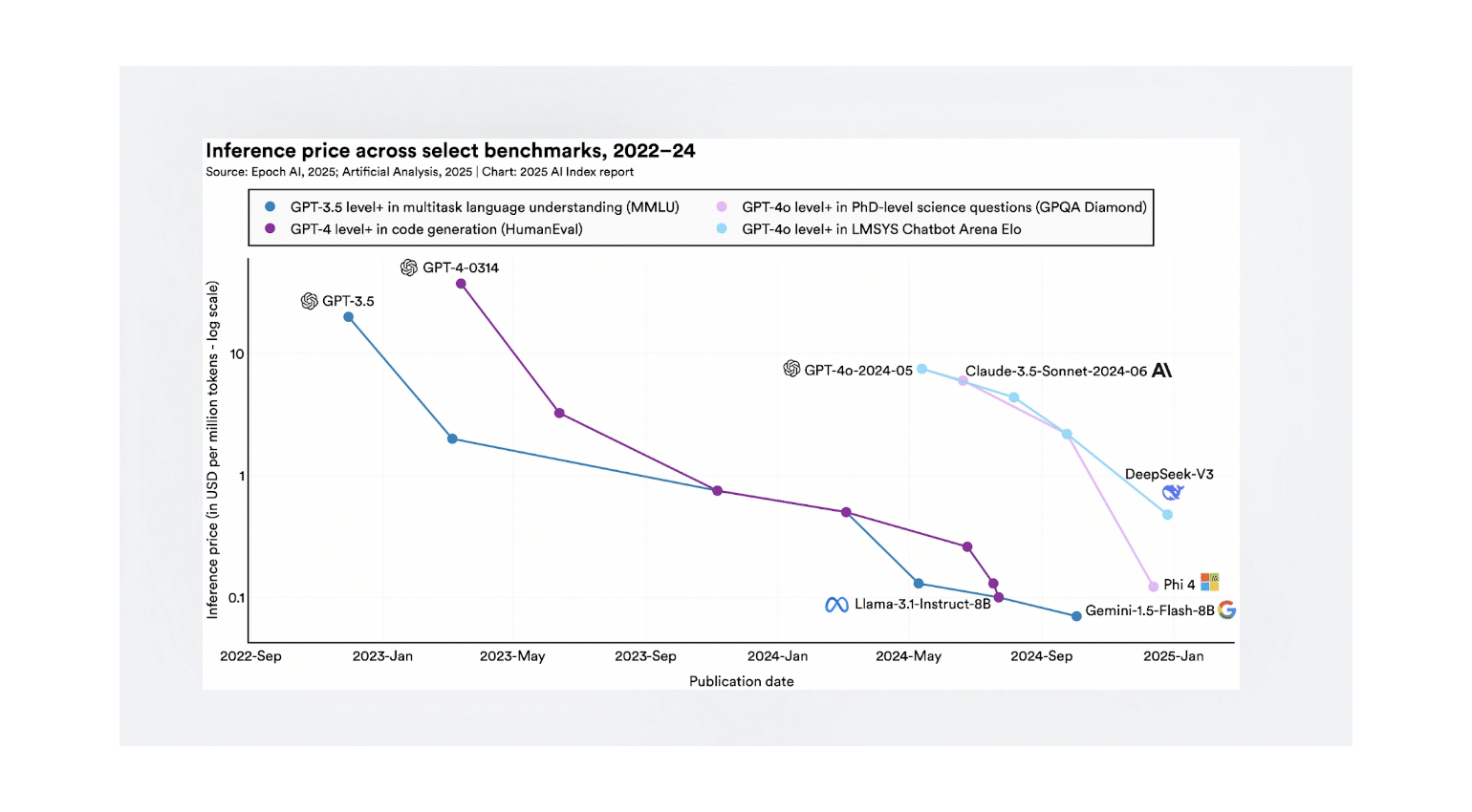

Yes, frontier models are expensive. They're also new. The cost of GPT-3.5-level inference dropped 280× in under two years. Older frontier models already price at pennies per million tokens. Blackwell delivers 4× Hopper throughput on Llama-70B. This is a standard tech cost curve.
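To put the 280× figure in more familiar terms, here is a rough back-of-the-envelope sketch. It assumes the decline was a smooth exponential and treats "under two years" as exactly 24 months; both are my simplifications, not measured facts, and the 24-month window makes the estimate conservative.

```python
import math

# Assumption: "under two years" is treated as exactly 24 months.
# A shorter real window would imply an even faster decline.
TOTAL_DROP = 280.0  # 280x cost reduction, as quoted in the text
MONTHS = 24.0       # assumed window length


def halving_time_months(total_drop: float, months: float) -> float:
    """Months needed for cost to halve, assuming a constant exponential decline."""
    return months / math.log2(total_drop)


def annual_drop_factor(total_drop: float, months: float) -> float:
    """Cost reduction factor per 12 months under the same assumption."""
    return total_drop ** (12.0 / months)


if __name__ == "__main__":
    print(f"halving time: {halving_time_months(TOTAL_DROP, MONTHS):.1f} months")
    print(f"annual drop:  {annual_drop_factor(TOTAL_DROP, MONTHS):.1f}x")
```

Under those assumptions, the price halves roughly every three months, or falls about 17× per year.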

The Cursor pricing drama? A startup moved from flat-rate to usage-based pricing. Some users complained. Without retention data, calling that "proof the model is broken" is just grabbing a story that fits.

The irony of fighting phantom agents

Zitron spends thousands of words debunking AGI hype - then judges everything by AGI standards. He dismisses "agents" because they're not fully autonomous systems.

Companies are shipping workflow tools, CRM automation, code helpers. Real customers pay for them. But Zitron can't see the value because they're not self-directed. It's like dismissing cars because they're not self-driving.

Infrastructure always looks excessive at first

That $560B isn't just spending - it's a moat for the platforms. Good luck competing when entry costs are half a trillion. Microsoft's $3B in real AI revenue against $80B in capex looks brutal - but AWS had the same optics in 2010, then flipped to 40% margins once usage caught up. The infrastructure IS the competitive advantage.
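For scale, the two ratios quoted in this piece can be put side by side. This is only a sketch of the arithmetic: the function name is mine, and the Microsoft figure compares capex to revenue while the industry figure compares total spend to revenue, so the two may not be strictly like-for-like.

```python
def spend_to_revenue(spend_billions: float, revenue_billions: float) -> float:
    """Dollars spent per dollar of revenue."""
    if revenue_billions <= 0:
        raise ValueError("revenue must be positive")
    return spend_billions / revenue_billions


# Figures as quoted in the text, in billions of dollars.
industry = spend_to_revenue(560, 35)
microsoft = spend_to_revenue(80, 3)

if __name__ == "__main__":
    print(f"industry:  {industry:.1f}:1")
    print(f"microsoft: {microsoft:.1f}:1")
```

By these numbers Microsoft's ratio is closer to 27:1, worse than the industry-wide 16:1, which is exactly why the optics look brutal.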

Platform risk? Every major tech firm starts under someone else's thumb. Customer concentration? Intel spent years living off a few OEMs. These aren't new problems - they're just normal platform adolescence.

The 'just a wrapper' fallacy

Zitron lists a handful of obvious use cases - chatbots, search, code generation - then declares that's all LLMs can do.

I'm shipping products that don't fit any of his categories. A mobile app that aggregates usage patterns into market insights. A system processing 5M social posts daily to surface breaking news. Brand intelligence tools that actually work. These aren't "wrappers" any more than Google is a "web crawler wrapper."

Builders create different moats. The moat isn't the LLM - it's the data pipeline, the user experience, the business logic. Calling these "LLM wrappers" is like calling a person a "skeleton wrapper." Technically true, totally misses the point.

The 16:1 problem

That ratio is genuinely alarming. Maybe it comes down as costs drop and revenue scales. Maybe it signals something fundamentally broken.

I'm betting this normalizes. He's betting it won't - and padding his case with Reddit anecdotes.
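What "normalizing" would take is easy to sketch. The growth rates below are purely hypothetical inputs I picked for illustration, not forecasts; the only number taken from the text is the 16:1 starting ratio.

```python
import math


def years_to_parity(ratio: float, revenue_growth: float, spend_growth: float = 1.0) -> float:
    """Years until revenue equals spend, given yearly growth multipliers.

    ratio          -- current spend divided by current revenue (16 in the text)
    revenue_growth -- hypothetical yearly revenue multiplier (2.0 = doubling)
    spend_growth   -- hypothetical yearly spend multiplier (1.0 = flat)
    """
    if revenue_growth <= spend_growth:
        return math.inf  # revenue never catches up
    return math.log(ratio) / math.log(revenue_growth / spend_growth)


if __name__ == "__main__":
    # Hypothetical scenarios, chosen only to show the shape of the bet.
    for rev, spend in [(2.0, 1.0), (2.0, 1.5), (1.5, 1.5)]:
        years = years_to_parity(16, rev, spend)
        print(f"revenue x{rev}/yr, spend x{spend}/yr -> {years:.1f} years")
```

If revenue doubled every year while spending stayed flat, 16:1 would close in four years, since 16 is 2 to the fourth power. If spending keeps growing too, the wait stretches out, and if it grows as fast as revenue, the gap never closes. That spread is the whole disagreement.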

What's striking is how often even skeptics get trapped by the AGI framing. Zitron spends 14,000 words torching sci-fi, then uses it as his benchmark. Meanwhile, the "boring" stuff is already working.